As someone who’s spent years optimizing data storage performance, I’m genuinely impressed by TigerBeetle’s bold architectural choices:

https://lnkd.in/eS3bfHHQ

🎯 Single-core design for maximum throughput

Instead of fighting contention with complex locking, TigerBeetle funnels all writes through one optimized core. For high-contention financial workloads (think central bank switches processing millions of transactions), this removes the locking and coordination overhead that throttles many distributed systems.
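To make the single-writer idea concrete, here is a minimal Python sketch. It is not TigerBeetle's code, and the names (`Ledger`, `submit`, `run`) are made up for illustration. Clients hand over whole batches, and one loop is the only place balances change, so even a "hot" account that every transfer touches needs no lock.

```python
from collections import deque


class Ledger:
    """Toy single-writer ledger: one loop applies every transfer in order."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.queue = deque()  # batches waiting for the single writer

    def submit(self, batch):
        # Clients enqueue whole batches instead of touching balances directly.
        self.queue.append(batch)

    def run(self):
        # The only code path that mutates balances, so no locks are needed.
        applied = 0
        while self.queue:
            for debit, credit, amount in self.queue.popleft():
                if self.balances[debit] < amount:
                    continue  # reject overdrafts (a real system would report them)
                self.balances[debit] -= amount
                self.balances[credit] += amount
                applied += 1
        return applied


ledger = Ledger({"bank": 100, "alice": 0, "bob": 0})
ledger.submit([("bank", "alice", 60), ("bank", "bob", 30)])
ledger.submit([("bank", "alice", 50), ("alice", "bob", 10)])
applied = ledger.run()
```

Every transfer here contends on the same `bank` account, which is exactly the case where row locks hurt most. Because the transfers are applied strictly in order, the overdraft in the second batch is rejected deterministically.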

🧠 Zero dynamic memory allocation after startup

This is the boldest choice of all: TigerBeetle sizes ALL of its memory from fixed limits, allocates it once at startup, and never allocates again. Fixed connection pools, fixed message sizes, bounded everything. The result? Predictable performance, no GC pauses, and bulletproof overload handling. When your database can't grow its memory usage, load can't push it into memory exhaustion.
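Here is a rough Python sketch of the "bounded everything" discipline, assuming a hypothetical `MessagePool` class (my own name, not a TigerBeetle API). All buffer memory is reserved once. When the pool is empty, the caller gets `None` and has to back off instead of the process growing. Python itself still allocates small objects behind the scenes, so this only illustrates the pattern, not truly allocation-free execution.

```python
class MessagePool:
    """Fixed number of fixed-size buffers, all reserved once at startup."""

    def __init__(self, count, size):
        self.size = size
        self.storage = bytearray(count * size)  # buffer memory, reserved once
        self.free = list(range(count))          # indices of unused slots

    def acquire(self):
        # Returns (slot, view) or None when the pool is exhausted.
        if not self.free:
            return None
        slot = self.free.pop()
        start = slot * self.size
        return slot, memoryview(self.storage)[start:start + self.size]

    def release(self, slot):
        # Hand the slot back so a later acquire() can reuse it.
        self.free.append(slot)


pool = MessagePool(count=2, size=128)
a = pool.acquire()
b = pool.acquire()
c = pool.acquire()   # pool is empty: the caller must wait or shed load
pool.release(a[0])
d = pool.acquire()   # reuses the slot that was just released
```

The useful property is that overload shows up as an explicit "no buffer available" result at a known place in the code, rather than as an out-of-memory failure somewhere unpredictable.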

⚡ Hardware-friendly optimizations

Fixed-size, cache-line-aligned data structures (128 bytes, hello M1 cache lines!)

Extensive batching and IO parallelization

Zero-copy operations with direct struct casting

Built specifically for the realities of modern CPU caches
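A small Python sketch of fixed-size records and zero-copy access, using `ctypes`. The `Transfer` layout below is hypothetical (I picked the fields so the struct comes out at 128 bytes; it is not TigerBeetle's actual layout), and Python gives no control over cache-line alignment, so treat this as an illustration of "cast the bytes, don't parse them".

```python
import ctypes

RECORD_SIZE = 128


class Transfer(ctypes.Structure):
    # Hypothetical layout: 5 * 8 bytes of fields + 88 reserved bytes = 128.
    _fields_ = [
        ("id", ctypes.c_uint64),
        ("debit_account", ctypes.c_uint64),
        ("credit_account", ctypes.c_uint64),
        ("amount", ctypes.c_uint64),
        ("timestamp", ctypes.c_uint64),
        ("reserved", ctypes.c_uint8 * 88),
    ]


assert ctypes.sizeof(Transfer) == RECORD_SIZE

# One buffer holding a batch of four records, e.g. as read from the network.
batch = bytearray(RECORD_SIZE * 4)

# Overlay a struct on the second record: no copy, no parsing step.
second = Transfer.from_buffer(batch, RECORD_SIZE)
second.id = 7
second.amount = 250

# A second overlay on the same bytes sees the same data.
again = Transfer.from_buffer(batch, RECORD_SIZE)
```

Because every record has the same size, record `i` always starts at byte `i * RECORD_SIZE`, which is what makes batching and direct casting cheap.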

🛡️ Storage fault tolerance that actually works

Most databases assume disks work perfectly. TigerBeetle assumes they don't, and handles corruption, torn writes, and even total data loss scenarios. Their browser simulator keeps running at 8-9% corruption rates!
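The general pattern behind "assume the disk lies" can be sketched in a few lines of Python. This is my own simplification, not TigerBeetle's storage engine: I use SHA-256 as a stand-in checksum, and `seal`, `unseal`, and `read_with_repair` are hypothetical helper names. Each block carries a checksum, every read verifies it, and a damaged copy is repaired from a replica that still has a valid one.

```python
import hashlib

DIGEST_SIZE = 32  # SHA-256 digest length in bytes


def seal(payload: bytes) -> bytes:
    # Store the checksum in front of the payload.
    return hashlib.sha256(payload).digest() + payload


def unseal(block: bytes):
    # Return the payload, or None if the checksum does not match.
    digest, payload = block[:DIGEST_SIZE], block[DIGEST_SIZE:]
    if hashlib.sha256(payload).digest() != digest:
        return None
    return payload


def read_with_repair(replicas, index):
    """Return the first valid copy of a block and overwrite damaged copies."""
    good = None
    for disk in replicas:
        payload = unseal(disk[index])
        if payload is not None:
            good = payload
            break
    if good is None:
        raise IOError("block is damaged on every replica")
    for disk in replicas:
        if unseal(disk[index]) is None:
            disk[index] = seal(good)  # repair from the valid copy
    return good


block = seal(b"transfer #1")
replicas = [[block], [block], [block]]

# Simulate bit rot on the first replica by flipping one payload byte.
damaged = bytearray(block)
damaged[40] ^= 0xFF
replicas[0][0] = bytes(damaged)

value = read_with_repair(replicas, 0)
```

The read still succeeds, and the first replica ends up with a clean copy again.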

🔬 Testing methodology is next-level

They built a deterministic simulator (VOPR) that compresses months of real-world testing into hours. They also paid for a full Jepsen audit and passed with Strong Serializability intact, which is rare, especially for a 0.16.x release.
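The core trick of deterministic simulation fits in a short Python sketch. This is a toy, not VOPR: the `simulate` function and its fault rates are assumptions of mine. All randomness comes from one seeded generator, and time is a loop counter instead of a wall clock, so a failing run can be replayed exactly from its seed and simulated time can run much faster than real time.

```python
import random


def simulate(seed, ticks=200, drop_rate=0.1, delay_rate=0.3):
    """Toy network simulation whose every decision derives from one seed."""
    rng = random.Random(seed)  # the only source of randomness
    in_flight, trace = [], []
    for tick in range(ticks):  # simulated time: no sleeping, no real clock
        in_flight.append(tick)  # a client sends message number `tick`
        roll = rng.random()
        if roll < drop_rate:
            # Fault injection: lose a random in-flight message.
            victim = in_flight.pop(rng.randrange(len(in_flight)))
            trace.append((tick, "drop", victim))
        elif roll < drop_rate + delay_rate:
            # Fault injection: nothing arrives this tick.
            trace.append((tick, "delay", None))
        else:
            # Deliver a random in-flight message, so reordering can happen.
            message = in_flight.pop(rng.randrange(len(in_flight)))
            trace.append((tick, "deliver", message))
    return trace


first = simulate(seed=42)
replay = simulate(seed=42)  # same seed, same sequence of events
```

Running with a different seed explores a different schedule of drops, delays, and reorderings, and any seed that exposes a bug becomes a reproducible test case.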

https://lnkd.in/eJhsz5YH

The Apache license and focus on double-entry accounting make this perfect for fintech, but the performance principles apply broadly.

Sometimes the best performance comes from embracing constraints rather than fighting them. TigerBeetle proves this beautifully.

Check out their static allocation deep-dive: https://lnkd.in/e43_4yiA

Or, even better, this presentation: https://lnkd.in/eixpKWsc

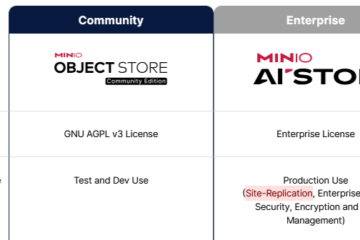
